Secure Remote MCP Servers With Entra ID And Azure API Management

One of the most requested pieces of guidance lately has been the answer to one very specific question - “Can I host a remote Entra ID-protected MCP server, and if so, how do I do that?” Well, you can, and in this blog post I will show you how.

It’s worth noting that the work on this is not coming just from me - I want to extend a huge thank you to Pranami Jhawar, Julia Kasper, and Annaji Sharma Ganti, who helped extensively with debugging and testing the sample, crafting API Management policies, and providing Azure subscriptions that I could test against.

The actual sample that I will be talking about here is a derivative work of a recently released Azure API Management demo.

Prerequisites

Before we get started, I should mention that you will need the following:

- An Azure subscription. We’re going to be using Azure Functions and Azure API Management here.

- An Entra ID tenant. If you have an Azure subscription, you should be golden.

- Azure Developer CLI installed. You can download it from the official website.

- Node.js. Also obtainable for free from the official website.

- Model Context Protocol Inspector. It’s an open-source tool we’ll use to test our protected MCP server, since most MCP clients don’t yet implement the authorization specification.

Infrastructure

One of the big pain points developers have is implementing anything related to authentication and authorization. If you don’t have the security expertise, it’s inherently painful and risky. You might misconfigure something and end up exposing all your data to people who should never see it.

To make this a bit easier for developers, we put together a sample that shows how the authorization problem can be delegated entirely to Entra ID and Azure API Management. Before I go into the details, I’ll mention that you can skip my write-up altogether and just rely on our sample, which does everything for you.

The project does a few things of interest that we’ll walk through here:

- Spin up a client Entra ID application registration that will be used for user authentication.

- Create another Entra ID application registration that will be used as the “resource” gate (that is - the first Entra ID app will request scopes related to this second app, which exposes a registered API).

- Deploy the Python code that represents our MCP server (written with the Azure Functions Python SDK) to Azure Functions.

- Spin up a bunch of required Azure resources to gate the Azure Functions deployment behind Azure API Management.

- As part of that, also put policies in place that ensure that Entra ID tokens never actually make it to the client - all the client gets is a session identifier.

But if you have a clean separation between the resource and the client app, doesn’t it mean that the Entra ID token issued to the first app can actually make it to the client?

Kind of - if we were using a public client application registration to kick off the authorization flow, then absolutely. However, the client application that we have hosted in Azure is a confidential client, which means its tokens should never be exposed to the client side. That’s OK, though - session mapping still works perfectly well!
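The session-mapping idea can be shown with a minimal in-memory sketch of what the gateway keeps track of. The sketch assumes a single instance and a plain dictionary with a fixed time-to-live. The SessionStore class and its method names are hypothetical. In the sample, APIM policies and the APIM cache do this job, not Python code. The point is that the client only ever holds a random, opaque identifier, and the Entra ID token stays on the server side.

```python
import secrets
import time
from typing import Optional


class SessionStore:
    """Hypothetical in-memory map from opaque session IDs to Entra ID tokens."""

    def __init__(self, ttl_seconds: int = 3600) -> None:
        self._ttl = ttl_seconds
        self._sessions: dict = {}  # session_id -> (token, expires_at)

    def create(self, entra_token: str) -> str:
        # The client only ever sees this random identifier, never the token itself.
        session_id = secrets.token_urlsafe(32)
        self._sessions[session_id] = (entra_token, time.time() + self._ttl)
        return session_id

    def resolve(self, session_id: str) -> Optional[str]:
        # Look up the cached token and drop the entry if it has expired.
        entry = self._sessions.get(session_id)
        if entry is None:
            return None
        token, expires_at = entry
        if time.time() >= expires_at:
            del self._sessions[session_id]
            return None
        return token


store = SessionStore()
sid = store.create("eyJ...entra-access-token")
print(sid != "eyJ...entra-access-token")  # True: the client gets an opaque ID
print(store.resolve(sid))                 # eyJ...entra-access-token
```

A real deployment would need a shared cache and an expiry that matches the token lifetime, which is a good part of why delegating this to APIM is convenient.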

In our code, we use two things that might look a little foreign to readers of this blog: Azure API Management policies and Bicep infrastructure definition files. The policies are defined as XML files, and Bicep files are, well, *.bicep. You can find them all in the infra directory. There’s a lot there and it may seem a bit confusing, but worry not - it’s there mainly because we built the sample to be ready to use out of the box. There are a lot of things you might not need for what you want to build, so feel free to trim it down a bit.

In the meantime, let’s explore some of the components that we have in play here.

Components

Code

The actual code of the Azure Function that acts as our MCP server is located in function_app.py. Its purpose is remarkably straightforward compared to other MCP servers. It does one thing, and it does it well - retrieving information about the currently authenticated user from Microsoft Graph. I mean, look at the code:

```python
import json
import logging
import os
import requests
import msal
import traceback
import jwt
from jwt.exceptions import PyJWTError
import azure.functions as func

app = func.FunctionApp(http_auth_level=func.AuthLevel.FUNCTION)

# These variables are set in Bicep and are automatically provisioned.
application_uami = os.environ.get('APPLICATION_UAMI', 'Not set')
application_cid = os.environ.get('APPLICATION_CID', 'Not set')
application_tenant = os.environ.get('APPLICATION_TENANT', 'Not set')

managed_identity = msal.UserAssignedManagedIdentity(client_id=application_uami)
mi_auth_client = msal.ManagedIdentityClient(managed_identity, http_client=requests.Session())


def get_managed_identity_token(audience):
    token = mi_auth_client.acquire_token_for_client(resource=audience)

    if "access_token" in token:
        return token["access_token"]
    else:
        raise Exception(f"Failed to acquire token: {token.get('error_description', 'Unknown error')}")


def get_jwks_key(token):
    """
    Fetches the JSON Web Key from Azure AD for token signature validation.

    Args:
        token: The JWT token to validate

    Returns:
        tuple: (signing_key, error_message)
            - signing_key: The public key to verify the token, or None if retrieval failed
            - error_message: Detailed error message if retrieval failed, None otherwise
    """
    try:
        # Get the kid and issuer from the token
        try:
            header = jwt.get_unverified_header(token)
            if not header:
                return None, "Failed to parse JWT header"
        except Exception as e:
            return None, f"Invalid JWT header format: {str(e)}"

        kid = header.get('kid')
        if not kid:
            return None, "JWT header missing 'kid' (Key ID) claim"

        try:
            payload = jwt.decode(token, options={"verify_signature": False})
            if not payload:
                return None, "Failed to decode JWT payload"
        except Exception as e:
            return None, f"Invalid JWT payload format: {str(e)}"

        issuer = payload.get('iss')
        if not issuer:
            return None, "JWT payload missing 'iss' (Issuer) claim"

        expected_issuer = f"https://sts.windows.net/{application_tenant}/"
        if issuer != expected_issuer:
            return None, f"JWT issuer '{issuer}' does not match expected issuer '{expected_issuer}'"

        jwks_uri = f"https://login.microsoftonline.com/{application_tenant}/discovery/v2.0/keys"
        try:
            resp = requests.get(jwks_uri, timeout=10)
            if resp.status_code != 200:
                return None, f"Failed to fetch JWKS: HTTP {resp.status_code} - {resp.text[:100]}"
            jwks = resp.json()
            if not jwks or 'keys' not in jwks or not jwks['keys']:
                return None, "JWKS response is empty or missing 'keys' array"
        except requests.RequestException as e:
            return None, f"Network error fetching JWKS: {str(e)}"
        except json.JSONDecodeError as e:
            return None, f"Invalid JWKS response format: {str(e)}"

        signing_key = None
        for key in jwks['keys']:
            if key.get('kid') == kid:
                try:
                    signing_key = jwt.algorithms.RSAAlgorithm.from_jwk(json.dumps(key))
                    break
                except Exception as e:
                    return None, f"Failed to parse JWK for kid='{kid}': {str(e)}"

        if not signing_key:
            return None, f"No matching key found in JWKS for kid='{kid}'"

        return signing_key, None
    except Exception as e:
        return None, f"Unexpected error getting JWKS key: {str(e)}"


def validate_bearer_token(bearer_token, expected_audience):
    """
    Validates a JWT bearer token against the expected audience and verifies its signature.

    Args:
        bearer_token: The JWT token to validate
        expected_audience: The expected audience value

    Returns:
        tuple: (is_valid, error_message, decoded_token)
            - is_valid: boolean indicating if the token is valid
            - error_message: error message if validation failed, None otherwise
            - decoded_token: the decoded token if validation succeeded, None otherwise
    """
    if not bearer_token:
        return False, "No bearer token provided", None

    try:
        logging.info(f"Validating JWT token against audience: {expected_audience}")

        signing_key, key_error = get_jwks_key(bearer_token)
        if not signing_key:
            return False, f"JWT key retrieval failed: {key_error}", None

        try:
            decoded_token = jwt.decode(
                bearer_token,
                signing_key,
                algorithms=['RS256'],
                audience=expected_audience,
                options={"verify_aud": True}
            )
            logging.info(f"JWT token successfully validated. Token contains claims for subject: {decoded_token.get('sub', 'unknown')}")
            return True, None, decoded_token
        except jwt.exceptions.InvalidAudienceError:
            return False, f"JWT has an invalid audience. Expected: {expected_audience}", None
        except jwt.exceptions.ExpiredSignatureError:
            return False, "JWT token has expired", None
        except jwt.exceptions.InvalidSignatureError:
            return False, "JWT has an invalid signature", None
        except PyJWTError as jwt_error:
            error_message = f"JWT validation failed: {str(jwt_error)}"
            logging.error(f"JWT validation error: {error_message}")
            return False, error_message, None
    except Exception as e:
        error_message = f"Unexpected error during JWT validation: {str(e)}"
        logging.error(error_message)
        return False, error_message, None


cca_auth_client = msal.ConfidentialClientApplication(
    application_cid,
    authority=f'https://login.microsoftonline.com/{application_tenant}',
    client_credential={"client_assertion": get_managed_identity_token('api://AzureADTokenExchange')}
)


@app.generic_trigger(
    arg_name="context",
    type="mcpToolTrigger",
    toolName="get_graph_user_details",
    description="Get user details from Microsoft Graph.",
    toolProperties="[]",
)
def get_graph_user_details(context) -> str:
    """
    Gets user details from Microsoft Graph using the bearer token.

    Args:
        context: The trigger context as a JSON string containing the request information.

    Returns:
        str: JSON containing the user details from Microsoft Graph.
    """
    token_error = None
    user_data = None

    try:
        logging.info(f"Context type: {type(context).__name__}")

        try:
            context_obj = json.loads(context)
            arguments = context_obj.get('arguments', {})
            bearer_token = None

            # Log only the argument names: the values include the injected bearer token.
            arg_names = list(arguments) if isinstance(arguments, dict) else []
            logging.info(f"Argument names: {arg_names}")

            if isinstance(arguments, dict):
                bearer_token = arguments.get('bearerToken')

            if not bearer_token:
                logging.warning("No bearer token found in context arguments")
                token_acquired = False
                token_error = "No bearer token found in context arguments"
            else:
                expected_audience = f"api://{application_cid}"
                is_valid, validation_error, decoded_token = validate_bearer_token(bearer_token, expected_audience)

                if is_valid:
                    # Exchange the validated user token for a Microsoft Graph token (OBO flow).
                    result = cca_auth_client.acquire_token_on_behalf_of(
                        user_assertion=bearer_token,
                        scopes=['https://graph.microsoft.com/.default']
                    )
                else:
                    token_acquired = False
                    token_error = validation_error
                    result = {"error": "invalid_token", "error_description": validation_error}

                if "access_token" in result:
                    logging.info("Successfully acquired access token using OBO flow")
                    token_acquired = True
                    access_token = result["access_token"]
                    token_error = None

                    try:
                        headers = {
                            'Authorization': f'Bearer {access_token}',
                            'Content-Type': 'application/json'
                        }
                        graph_url = 'https://graph.microsoft.com/v1.0/me'
                        response = requests.get(graph_url, headers=headers, timeout=10)

                        if response.status_code == 200:
                            user_data = response.json()
                            logging.info("Successfully retrieved user data from Microsoft Graph")
                        else:
                            logging.error(f"Failed to get user data: {response.status_code}, {response.text}")
                            token_error = f"Graph API error: {response.status_code}"
                    except Exception as graph_error:
                        logging.error(f"Error calling Graph API: {str(graph_error)}")
                        token_error = f"Graph API error: {str(graph_error)}"
                else:
                    token_acquired = False
                    token_error = result.get('error_description', 'Unknown error acquiring token')
                    logging.warning(f"Failed to acquire token using OBO flow: {token_error}")
        except Exception as e:
            token_acquired = False
            token_error = str(e)
            logging.error(f"Exception when acquiring token: {token_error}")

        try:
            response = {}
            if user_data:
                response = user_data
                response['success'] = True
            else:
                response['success'] = False
                response['error'] = token_error or "Failed to retrieve user data"

            logging.info(f"Returning response: {json.dumps(response)[:500]}...")
            return json.dumps(response, indent=2)
        except Exception as format_error:
            logging.error(f"Error formatting response: {str(format_error)}")
            return json.dumps({
                "success": False,
                "error": f"Error formatting response: {str(format_error)}"
            }, indent=2)
    except Exception as e:
        stack_trace = traceback.format_exc()
        # Don't echo the raw trigger context back: it contains the injected bearer token.
        return json.dumps({
            "error": f"An error occurred: {str(e)}\n{stack_trace}",
            "stack_trace": stack_trace
        }, indent=2)
```

I appreciate your patience in getting through this wall of text, because it truly is substantial. While the code might look intimidating at first glance, it’s actually quite straightforward. The entirety of the snippet is essentially an implementation of one MCP server tool (get_graph_user_details). There are a few helper functions here that take more space than the actual tool logic, such as:

- get_managed_identity_token - gets us the managed identity access token that we need for secretless operation.

- validate_bearer_token - validates an incoming bearer token that is destined for our MCP server by checking its signature, audience, and expiration.

- get_jwks_key - reads the key ID (kid) and issuer from the token, confirms that the issuer is our tenant, and fetches the matching public signing key from the tenant’s JWKS endpoint. validate_bearer_token then uses that key to verify the token’s signature.
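Before get_jwks_key does any cryptography, it reads kid and iss from the token. That step is only base64url decoding of the first two token segments. The sketch below shows that step, plus the kid lookup against a JWKS document, using only the standard library. The helper names are hypothetical, and the token and key set are made up for illustration. Nothing here verifies a signature. In the sample, signature verification happens afterwards in validate_bearer_token, when jwt.decode is called with the matched public key.

```python
import base64
import json
from typing import Optional


def b64url_decode(segment: str) -> bytes:
    # JWT segments are base64url without padding; restore it before decoding.
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def b64url_encode(obj: dict) -> str:
    raw = json.dumps(obj).encode()
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode()


def unverified_parts(token: str) -> tuple:
    """Return (header, payload) WITHOUT checking the signature."""
    header_b64, payload_b64, _signature = token.split(".")
    return json.loads(b64url_decode(header_b64)), json.loads(b64url_decode(payload_b64))


def find_jwk(jwks: dict, kid: str) -> Optional[dict]:
    # Pick the key whose "kid" matches the token header, like get_jwks_key does.
    return next((k for k in jwks.get("keys", []) if k.get("kid") == kid), None)


# A fake, unsigned token built purely for illustration.
fake_token = ".".join([
    b64url_encode({"alg": "RS256", "kid": "key-1"}),
    b64url_encode({"iss": "https://sts.windows.net/TENANT_ID/", "aud": "api://CLIENT_ID"}),
    "signature-not-checked",
])
header, payload = unverified_parts(fake_token)
jwks = {"keys": [{"kid": "key-0", "kty": "RSA"}, {"kid": "key-1", "kty": "RSA"}]}
print(header["kid"], payload["iss"])  # key-1 https://sts.windows.net/TENANT_ID/
print(find_jwk(jwks, header["kid"]))  # {'kid': 'key-1', 'kty': 'RSA'}
```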

There are a few other things worth noting here, because remember - it’s not just that we’re running an Azure Function. We’re running it behind Azure API Management. When an MCP client connects to our MCP server for the first time, we want to put the user through the authorization flow.

What that means is that our Azure API Management “gateway” will be responsible for:

- Routing the authorization requests to Entra ID.

- Checking that a token was obtained from Entra ID.

- Issuing a session identifier and caching the token.

- Passing the session identifier to the client, which in turn will use it as a token in its Authorization header (remember - no Entra ID tokens on the client).

However, there is an interesting challenge here that we need to deal with - when I am using mcpToolTrigger, I don’t actually get any authorization context (such as the incoming request headers) inside the Azure Function instance that I’m running, as I would with, say, an HTTP trigger. That’s a bit of a bummer, because we need that context to get user details from Microsoft Graph.

But before we talk about that, I think it’s worth outlining what the end-to-end user flow should be here - it will give you a better picture of what we need to do:

Like staring at a pile of thrown-around LEGO bricks, am I right? Let me explain what is happening with the infrastructure we have at hand, at a very high level.

1. The MCP client will attempt to connect to the MCP server, which is gated by Azure API Management (APIM).

2. APIM, in turn, will help the client kick off the authorization flow with Entra ID because the server is protected.

3. Entra ID will issue a token with the MCP server as the audience and pass it to APIM.

4. APIM will cache the token and in turn produce a session identifier that it will package up as a JWT and return to the MCP client.

5. The client, now in possession of a token representing the session, can request data from the MCP server (behind APIM).

6. APIM will verify the session token and, if things are as expected, request data from Azure Functions, attaching the bearer token from step (3) so that the server has the actual Entra ID credentials to operate with.

7. Inside Azure Functions, the MCP server logic will kick in. For example, if a tool is invoked, the MCP server will validate the inbound bearer token (e.g., make sure that it’s issued to the right audience).

8. If the token passes the checks, it will be used as an assertion in a request to Entra ID to exchange it for another token, this time to access Microsoft Graph on behalf of the user.

9. Entra ID will return the token to our running Azure Function (at this point, APIM is no longer involved in the exchange).

10. Our code running in Azure Functions will now request data from Microsoft Graph with an appropriate token.

That’s it. Ten steps. Once the data from Microsoft Graph is returned, it will flow back to the Azure Function code, which will then route it all the way back to the client.
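Step 7 is where the server decides whether to trust the inbound token. In the sample, PyJWT checks the audience, expiration, and not-before claims as part of jwt.decode, and get_jwks_key compares the issuer. The sketch below spells out those claim-level checks with the standard library. It assumes the payload has already passed signature verification, because trust decisions should never rest on unverified claims. The check_claims function and its leeway_seconds parameter are hypothetical. The issuer and audience formats mirror what function_app.py expects.

```python
import time
from typing import Optional


def check_claims(payload: dict, tenant_id: str, client_id: str,
                 leeway_seconds: int = 60, now: Optional[float] = None) -> Optional[str]:
    """Return None if the (already signature-verified) claims are acceptable, else a reason."""
    now = time.time() if now is None else now
    if payload.get("iss") != f"https://sts.windows.net/{tenant_id}/":
        return "unexpected issuer"
    aud = payload.get("aud")
    audiences = aud if isinstance(aud, list) else [aud]
    if f"api://{client_id}" not in audiences:
        return "token was not issued for this MCP server"
    # A missing exp is treated as already expired.
    if payload.get("exp", 0) + leeway_seconds < now:
        return "token has expired"
    if payload.get("nbf", 0) - leeway_seconds > now:
        return "token is not valid yet"
    return None


claims = {"iss": "https://sts.windows.net/T/", "aud": "api://C", "exp": 2_000, "nbf": 1_000}
print(check_claims(claims, "T", "C", now=1_500))      # None
print(check_claims(claims, "T", "OTHER", now=1_500))  # token was not issued for this MCP server
print(check_claims(claims, "T", "C", now=5_000))      # token has expired
```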

As an added bonus, you might’ve noticed that in the Python code above there are no references to any client secrets. That’s because we now have the ability to go completely secretless for Entra ID applications running on the server, which is a good thing - secrets can be stolen, and we want to minimize the risk of that happening. Instead of a secret, we use an associated managed identity as a federated identity credential with our application registration. This allows us to get a managed identity access token and then use it as a client assertion, which helps establish the application identity server-side.
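To show what happens on the wire in step 8, the sketch below assembles the form body that a confidential client sends to the tenant’s v2.0 token endpoint for an on-behalf-of exchange, using a client assertion instead of a secret. It does not send anything, and build_obo_request is a hypothetical helper. The parameter names follow the Microsoft identity platform on-behalf-of flow documentation. MSAL’s acquire_token_on_behalf_of takes care of all this for you, and it may add parameters of its own (such as extra default scopes), so treat this purely as an illustration.

```python
from urllib.parse import parse_qs, urlencode


def build_obo_request(tenant_id: str, client_id: str, mi_assertion: str,
                      user_token: str, scope: str) -> tuple:
    """Return (token_endpoint_url, form_body) for an on-behalf-of exchange."""
    url = f"https://login.microsoftonline.com/{tenant_id}/oauth2/v2.0/token"
    form = {
        "grant_type": "urn:ietf:params:oauth:grant-type:jwt-bearer",
        "client_id": client_id,
        # Secretless: the managed identity token stands in for a client secret.
        "client_assertion_type": "urn:ietf:params:oauth:client-assertion-type:jwt-bearer",
        "client_assertion": mi_assertion,
        # The validated inbound user token that APIM injected.
        "assertion": user_token,
        "scope": scope,
        "requested_token_use": "on_behalf_of",
    }
    return url, urlencode(form)


url, body = build_obo_request("TENANT_ID", "CLIENT_ID", "mi-token", "user-token",
                              "https://graph.microsoft.com/.default")
print(url)                                    # https://login.microsoftonline.com/TENANT_ID/oauth2/v2.0/token
print(parse_qs(body)["requested_token_use"])  # ['on_behalf_of']
```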

I digress, though. Going back to the original challenge that I mentioned above - we are not able to directly pass the request context into the tool. What can we do about it? As it turns out, this is where Azure API Management policies come in handy. This is what the next section is about.

Policies and infrastructure definitions

Think of policies as nothing more than “behavior configuration” for an API. Because Azure API Management acts as a “gateway” (I am using this term very loosely here) to our hosted MCP server Azure Function, it controls what information is passed through. For example, take a look at oauth-callback.policy.xml inside apim-oauth. It’s responsible for building out the callback that redeems an authorization code with Entra ID and then produces a “session” for the MCP client (this might feel oddly familiar if you’ve ever encountered the Backend for Frontend, or BFF, pattern before).
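The “redemption of an authorization code” mentioned above boils down to one token request against Entra ID. The sketch below builds the form body that a confidential client would send in the authorization code flow, using the standard library and sending nothing. The helper name is hypothetical. Two details are my assumptions: the use of PKCE (code_verifier), and the use of a client assertion rather than a client secret. The actual request made by oauth-callback.policy.xml may differ, so treat the policy file as the source of truth.

```python
from urllib.parse import parse_qs, urlencode


def build_code_redemption(tenant_id: str, client_id: str, code: str, redirect_uri: str,
                          code_verifier: str, scope: str, client_assertion: str) -> tuple:
    """Return (token_endpoint_url, form_body) for an authorization code redemption."""
    url = f"https://login.microsoftonline.com/{tenant_id}/oauth2/v2.0/token"
    form = {
        "grant_type": "authorization_code",
        "client_id": client_id,
        "code": code,                    # one-time code delivered to /oauth-callback
        "redirect_uri": redirect_uri,    # must match the redirect URI used at /authorize
        "code_verifier": code_verifier,  # PKCE: proves the same party started the flow
        "scope": scope,
        # Confidential client credential (assumed here to be an assertion, not a secret).
        "client_assertion_type": "urn:ietf:params:oauth:client-assertion-type:jwt-bearer",
        "client_assertion": client_assertion,
    }
    return url, urlencode(form)


url, body = build_code_redemption(
    "TENANT_ID", "CLIENT_APP_ID", "AUTH_CODE",
    "https://apim-example.azure-api.net/oauth-callback",
    "VERIFIER", "api://RESOURCE_APP_ID/user_impersonation", "CLIENT_ASSERTION")
print(parse_qs(body)["grant_type"])  # ['authorization_code']
```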

Given the amount of flexibility that policies offer us, even if we don’t have the ability to pass the request context to the tool, we can amend what goes from the MCP client to the MCP server in transit. For that, take a look at mcp-api.policy.xml. This is the relevant bit:

```xml
<choose>
    <when condition="@(context.Request.Body != null && context.Request.Body.As<JObject>(preserveContent: true) != null)">
        <!-- If body exists and is valid JSON, add token to the params section -->
        <set-body>@{
            var requestBody = context.Request.Body.As<JObject>(preserveContent: true);
            if (requestBody["params"] != null && requestBody["params"].Type == JTokenType.Object)
            {
                var paramsObj = (JObject)requestBody["params"];
                // Look for arguments section or create it if it doesn't exist
                if (paramsObj["arguments"] != null && paramsObj["arguments"].Type == JTokenType.Object)
                {
                    // Add bearer token to the arguments section
                    var argumentsObj = (JObject)paramsObj["arguments"];
                    argumentsObj["bearerToken"] = (string)context.Variables.GetValueOrDefault("bearer");
                }
                else if (paramsObj["arguments"] == null)
                {
                    // Create arguments section if it doesn't exist
                    paramsObj["arguments"] = new JObject();
                    ((JObject)paramsObj["arguments"])["bearerToken"] = (string)context.Variables.GetValueOrDefault("bearer");
                }
                else
                {
                    // Fallback: add directly to params if arguments exists but is not an object
                    paramsObj["bearerToken"] = (string)context.Variables.GetValueOrDefault("bearer");
                }
            }
            else
            {
                // If params section doesn't exist or isn't an object, just add to root as fallback
                requestBody["bearerToken"] = (string)context.Variables.GetValueOrDefault("bearer");
            }
            return requestBody.ToString();
        }</set-body>
    </when>
    <otherwise>
        <!-- If no body or not JSON, create a new JSON body with the token -->
        <set-body>@{
            var newBody = new JObject();
            newBody["bearerToken"] = (string)context.Variables.GetValueOrDefault("bearer");
            return newBody.ToString();
        }</set-body>
    </otherwise>
</choose>
```

We know the structure of the input that the tool expects - it’s a JSON blob with a params object that contains a nested arguments object. That’s exactly where we can inject the resolved bearer token. Because the client only passes a session identifier to APIM, APIM looks up the bearer token associated with that session and passes it on to the tool.
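If C# policy expressions aren’t your daily bread, here is the same branching mirrored in Python. It’s only an illustration for readability, since APIM runs the policy, not this code. inject_bearer_token is a hypothetical name, and its bearer parameter stands in for the policy’s bearer context variable. The example request is a typical MCP tools/call message.

```python
import json


def inject_bearer_token(raw_body: str, bearer: str) -> str:
    """Mirror of the mcp-api policy: put bearerToken where the tool will look for it."""
    try:
        body = json.loads(raw_body) if raw_body else None
    except json.JSONDecodeError:
        body = None
    if not isinstance(body, dict):
        # <otherwise>: no body, or not a JSON object, so start a fresh one.
        return json.dumps({"bearerToken": bearer})

    params = body.get("params")
    if isinstance(params, dict):
        arguments = params.get("arguments")
        if isinstance(arguments, dict):
            arguments["bearerToken"] = bearer              # the usual case
        elif arguments is None:
            params["arguments"] = {"bearerToken": bearer}  # create the arguments object
        else:
            params["bearerToken"] = bearer                 # arguments exists but isn't an object
    else:
        body["bearerToken"] = bearer                       # no usable params section
    return json.dumps(body)


request = {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
           "params": {"name": "get_graph_user_details", "arguments": {}}}
print(inject_bearer_token(json.dumps(request), "ENTRA_TOKEN"))
```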

Wait, wait, wait. Isn’t it a bad practice to expose any credentials to LLMs? I thought we should not be making them available to those because of potential exfiltration risks.

The good news is that in this approach, none of the tokens are ever exposed to the LLMs themselves. All we’re doing is injecting the token, server-side, as “context” for the tool, which can then operate with that token as it sees fit (e.g., use token exchange to obtain another token). The credential never reaches the client in any capacity (unless you explicitly return the token in the result, which you shouldn’t do, ever).
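A cheap safety net for the “never return the token” rule is to scrub the injected field from anything you log or return. The minimal sketch below assumes the token only ever shows up under keys like bearerToken, the name the policy uses. The redact helper and the exact set of sensitive keys are hypothetical choices for illustration.

```python
import json
from typing import Any

SENSITIVE_KEYS = {"bearerToken", "access_token", "refresh_token"}


def redact(value: Any) -> Any:
    """Return a copy of value with sensitive keys masked, recursing into dicts and lists."""
    if isinstance(value, dict):
        return {k: "[REDACTED]" if k in SENSITIVE_KEYS else redact(v) for k, v in value.items()}
    if isinstance(value, list):
        return [redact(item) for item in value]
    return value


context = {"arguments": {"bearerToken": "eyJ...", "query": "me"}}
print(json.dumps(redact(context)))
# {"arguments": {"bearerToken": "[REDACTED]", "query": "me"}}
```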

For our authorization logic to actually work, we will also need to make sure that we have properly registered and configured Entra ID application registrations. Worry not, though, because that can be done entirely in Bicep:

```bicep
module entraResourceApp './entra-resource-app.bicep' = {
  name: 'entraResourceApp'
  params: {
    entraAppUniqueName: entraResourceAppUniqueName
    entraAppDisplayName: entraResourceAppDisplayName
    userAssignedIdentityPrincipleId: entraAppUserAssignedIdentityPrincipleId
  }
}

module entraClientApp './entra-client-app.bicep' = {
  name: 'entraClientApp'
  params: {
    entraAppUniqueName: entraClientAppUniqueName
    entraAppDisplayName: entraClientAppDisplayName
    resourceAppId: entraResourceApp.outputs.entraAppId
    resourceAppScopeId: entraResourceApp.outputs.entraAppScopeId
    apimOauthCallback: '${apimService.properties.gatewayUrl}/oauth-callback'
    userAssignedIdentityPrincipleId: entraAppUserAssignedIdentityPrincipleId
  }
}
```

Because Bicep allows extensive parameterization of various infrastructure components, we can relatively easily set up the apps the way that we need. As I mentioned earlier, we have two applications - one that is the client app (getting a token for our MCP server), and another that is the resource app (used by the server to get a token for Microsoft Graph). The client app depends on the resource app. It receives the resource app’s ID and scope ID (the resourceAppId and resourceAppScopeId parameters above), so that it can be set up to request the scope that the resource app exposes.
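The two registrations meet at runtime in the authorize URL that starts the flow. That URL carries the client app’s ID, a scope exposed by the resource app, and APIM’s /oauth-callback as the redirect URI, which is the same value the Bicep passes as apimOauthCallback. The sketch below builds such a URL with a PKCE S256 challenge, using only the standard library. The scope name user_impersonation, the gateway URL, and the IDs are placeholders I’m assuming for illustration. Check entra-resource-app.bicep for the scope the sample actually exposes.

```python
import base64
import hashlib
import secrets
from urllib.parse import urlencode


def pkce_pair() -> tuple:
    """Return (code_verifier, code_challenge) using the S256 method from RFC 7636."""
    verifier = secrets.token_urlsafe(64)  # 86 URL-safe characters
    digest = hashlib.sha256(verifier.encode("ascii")).digest()
    challenge = base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")
    return verifier, challenge


def authorize_url(tenant_id: str, client_app_id: str, resource_app_id: str,
                  gateway_url: str, challenge: str, state: str) -> str:
    params = {
        "client_id": client_app_id,
        "response_type": "code",
        # Same redirect URI that the Bicep registers via apimOauthCallback.
        "redirect_uri": f"{gateway_url}/oauth-callback",
        # A scope exposed by the resource app (the scope name is a placeholder).
        "scope": f"api://{resource_app_id}/user_impersonation",
        "code_challenge": challenge,
        "code_challenge_method": "S256",
        "state": state,
    }
    return (f"https://login.microsoftonline.com/{tenant_id}/oauth2/v2.0/authorize?"
            + urlencode(params))


verifier, challenge = pkce_pair()
url = authorize_url("TENANT_ID", "CLIENT_APP_ID", "RESOURCE_APP_ID",
                    "https://apim-example.azure-api.net", challenge, secrets.token_urlsafe(16))
print(url)
```

The verifier stays with whoever redeems the code later. Only the challenge travels in the URL.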

I’d recommend you go through the Bicep files in the sample to see what resources are stood up for the sample.

Deploying and testing

Now that you’re familiar with the overall architecture, let’s walk through the process of deploying and testing the sample.

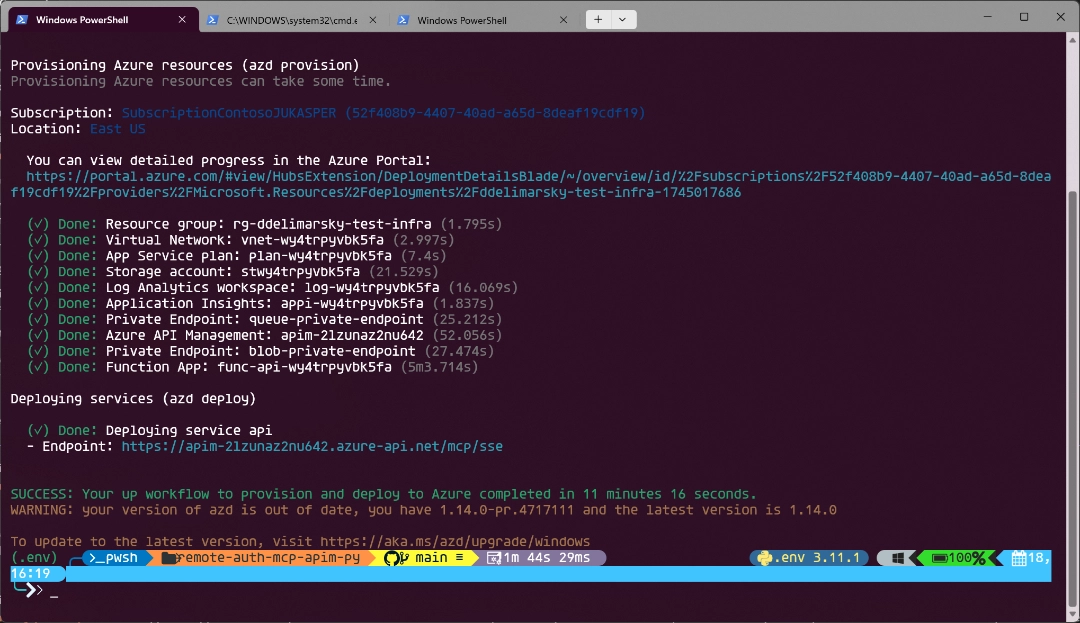

First, we need to run azd up to get the resources declared in the infra directory provisioned in your Azure account. You can go through the existing Bicep files to see what infrastructure will be automatically deployed. As I said above - you can tinker with those to see what you might not need at all.

Once the deployment completes, you will be see the endpoint printed right in the terminal:

For example, in the screenshot above the endpoint is https://apim-2lzunaz2nu642.azure-api.net/mcp/sse. Take note of it, as you will need it shortly.

In your terminal, run:

```bash
npx @modelcontextprotocol/inspector
```

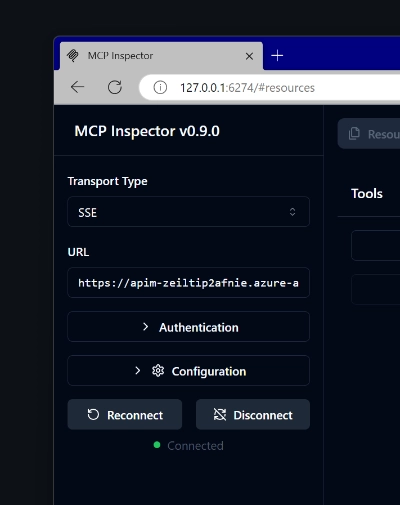

This will give you a local URL where Model Context Protocol Inspector is running. Open this URL in your browser.

Now, switch the Transport Type to SSE and set the URL to the endpoint that you got from running the deployment, and of course - click Connect.

You will be prompted to authenticate with the credentials in the tenant in which you deployed the Azure infrastructure pieces. The Entra ID applications are dynamically registered at deployment time - one for the server and another that will be used for the on-behalf-of flow to acquire Microsoft Graph access.

Once you consent, you will be returned to the Model Context Protocol Inspector landing page. Wait a few seconds until the connection is established - you will see a green Connected label on the page.

Once connected, click on List Tools and select get_graph_user_details. This will enable you to get data about the currently authenticated user from Microsoft Graph. Click Run Tool to invoke the tool.

If all goes well, you will see your user data in the response block. It should resemble something like this metadata I have from a test tenant:

```json
{
  "@odata.context": "https://graph.microsoft.com/v1.0/$metadata#users/$entity",
  "businessPhones": [],
  "displayName": "YOUR_NAME",
  "givenName": null,
  "jobTitle": null,
  "mail": "YOUR_EMAIL",
  "mobilePhone": null,
  "officeLocation": null,
  "preferredLanguage": null,
  "surname": null,
  "userPrincipalName": "YOUR_UPN",
  "id": "c6b77314-c0ec-44b2-b0bb-2c971a753f0c",
  "success": true
}
```
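You may prefer to consume the tool from your own code rather than inspect it by eye in the Inspector. In that case, the success flag that get_graph_user_details adds to every response makes it easy to tell a Graph payload from an error. The small sketch below assumes you already have the text content of the tool result as a string. summarize_tool_result is a hypothetical helper.

```python
import json


def summarize_tool_result(result_text: str) -> str:
    """Turn the tool's JSON string into a one-line summary."""
    data = json.loads(result_text)
    if data.get("success"):
        return f"Signed in as {data.get('displayName')} ({data.get('userPrincipalName')})"
    return f"Tool failed: {data.get('error', 'unknown error')}"


print(summarize_tool_result('{"displayName": "Ada", "userPrincipalName": "ada@contoso.com", "success": true}'))
# Signed in as Ada (ada@contoso.com)
print(summarize_tool_result('{"success": false, "error": "JWT token has expired"}'))
# Tool failed: JWT token has expired
```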

Congratulations, you now know how to deploy MCP servers that are properly protected by Entra ID! I think this is kind of cool, especially for enterprise scenarios where there is a desire to use established third-party identity providers (like, cough, Entra ID, cough).